I’ve been working with SQL Server for 18 years and over that long span I’ve seen a lot of different techniques for tuning and development.

There are essentially two principles of development, Code First and Database First. Naturally, being a life long database professional I’d say that Database First is the only way to properly approach a project. You’d start with a business analyst gathering requirements, build out the database ERD, and then start coding.

Building the tables in visual studio and thinking about the application code first is not right. It’s just outright wrong, right? If you haven’t read about migrations, you should. They are a really helpful way of deploying DDL and you can design the tables any which way you like. There’s not really a need for individual DDL scripts. One such example is FluentMigrator. I may follow up with more on this topic in another post. For now let’s get to something more interesting to us SQL folk.

Developers write SQL like a developer

I recently helped out with a .NET MVC project running on SQL Server 2016 where I found some pretty interesting stored procedures. I’ve seen a lot of really creative SQL but these were completely puzzling.

The database included many to many tables for customers who have addresses and phone numbers. A “mapping” table was created for the tables so they could map to a customer.

Normally you’d think a simple JOIN would suffice to get a list of addresses or phone numbers for a customer. These was done a way that I’ve never seen before.

Note that I modified the production code to run on my test system in AdventureWorks2012 and to anonymize the code.

First, a user defined table type was created.

CREATE TYPE [dbo].[BigIntArray] AS TABLE

(

[Id] [bigint] NOT NULL

)

Can you see where this is going? If you can you’ve probably already planted your palm firmly over your face.

Next, not one but two stored procedures were created.

— =============================================

— Author: <Author,,Name>

— Create date: <Create Date,,>

— Description: <Description,,>

— =============================================

CREATE PROCEDURE [Person].[Addresses_GetAddressesForPerson]

@Id BIGINT

AS

BEGIN

DECLARE @Ids [dbo].[BigIntArray]INSERT INTO @Ids

SELECT [AddressId]

FROM [Person].[BusinessEntityAddress]

WHERE [BusinessEntityId] = @IdEXEC [Person].[Addresses_GetAddressesByIds] @Ids

END

— =============================================

— Author: <Author,,Name>

— Create date: <Create Date,,>

— Description: <Description,,>

— =============================================

CREATE PROCEDURE [Person].[Addresses_GetAddressesByIds]

@Ids [dbo].[BigIntArray] READONLY

AS

BEGIN

SET NOCOUNT ON;SELECT [AddressId], [AddressLine1], [AddressLine2], [City], [StateProvinceId], [PostalCode], [ModifiedDate]

FROM [Person].[Address]

WHERE [AddressId] IN (SELECT [Id] FROM @Ids)

ORDER BY [AddressId] DESC

END

I always like when comments are in SQL code, especially when they are left completely untouched from their original template form. I’m sure Jen from MidnightDBA would like these comments.

How would I expect this to be written?

ALTER PROCEDURE Person.Addresses_Get_List

@BusinessEntityID int

ASSELECT A.AddressId, A.AddressLine1, A.AddressLine2, A.City, A.StateProvinceId, A.PostalCode, A.ModifiedDate

FROM Person.Address A

INNER JOIN Person.BusinessEntityAddress BEA ON (A.AddressID = BEA.AddressID)

WHERE P.BusinessEntityID = @BusinessEntityID

ORDER BY [AddressId] DESC

I’m always open to learning and understanding the benefits of different approaches, just like using c# to write the database DDL as mentioned above. Let’s compare the two methods of retrieving a list of addresses.

Let’s start with the existing production code

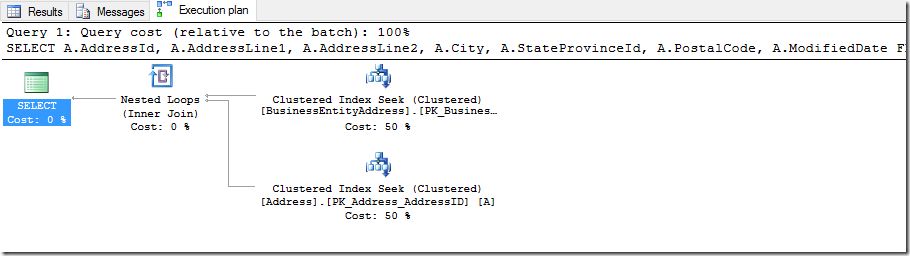

The existing production code produces the following query plan

The combined cost of the two queries is 0.0312159.

SET STATISTICS TIME results

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 252 ms.

SQL Server parse and compile time:

CPU time = 0 ms, elapsed time = 0 ms.

SET STATISTICS IO results

Table ‘#A9F65DFA’. Scan count 0, logical reads 1, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table ‘BusinessEntityAddress’. Scan count 1, logical reads 2, physical reads 2, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table ‘Address’. Scan count 0, logical reads 2, physical reads 2, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table ‘#A9F65DFA’. Scan count 1, logical reads 1, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

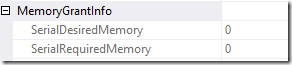

Memory grant data

Now let’s compare the other way

The cost of this query is 0.0065704.

SET STATISTICS TIME results

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 45 ms.

SQL Server parse and compile time:

CPU time = 0 ms, elapsed time = 0 ms.

SET STATISTICS IO results

Table ‘Address’. Scan count 0, logical reads 2, physical reads 2, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table ‘BusinessEntityAddress’. Scan count 1, logical reads 2, physical reads 2, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Memory grant data

Did we learn anything?

It’s clear that the production code costs much more and consumes more resources.

When the two procedures are run together we see that the query that uses a join costs only 17% of the total batch.

So what did we learn? SQL is a language that is often under estimated as being very simple. To query you just need “SELECT FROM WHERE”. I’ve run into some developers that characterize like this because it’s not a “real developer’s language”; yet, the people that feel this way have only scratched the surface. These are also often the same developers that mistake SQL to be object oriented and assume that everything will perform well no matter how a query is written. “Just throw more hardware at it” is often said; however, this is a really bad especially in Azure with the cost of compute.

Don’t get me wrong not all developers think this way or write SQL code as if it were object oriented.

As it turns out this was a conscious design decision made by the c# developer who wrote the procedures, in hopes of reducing future maintenance if any columns were added. The thought was that there would be less places to change code.

Recommendation?

A DBA can easily tune this query. The problem is it may not bubble to the top which may allow it to remain in production for some time.

If you don’t already have, I would recommend implementing a solid code review process for all application and database code, no matter how senior the developer is who wrote it. Code reviews can catch issues like this design before they move to production and institute learning for each of the developers involved in the process.

TFS has a built-in code review feature. If you use JIRA then Smartbear has a nice tool that integrates well. https://smartbear.com/product/collaborator/overview/

I’d also recommend that for the majority of projects, performance be considered first and foremost.

I personally got involved in a CODE First project last year, it was a semi-disaster in that data model keeps on changing at individual developer’s will, and huge performance hit. Long story short, the project is supposed to finish by end of 2016 April but now it is still on-going. I left the project after only a half year as I could not see the light of the project completion day.

LikeLike

I only recently reviewed a code first application. It’s interesting that regardless of the language behind the middle layer, code first applications very often and up in a mess. Developers rely on magical powers of an ORM, and turn to database specialists only after system meltdown.

LikeLike

[…] via Inside the mind of a c# developer — SQL TECH BLOG […]

LikeLike